#SPLUNK ADD A FILE MONITOR INPUT TO SEND EVENTS TO THE INDEX MANUAL#

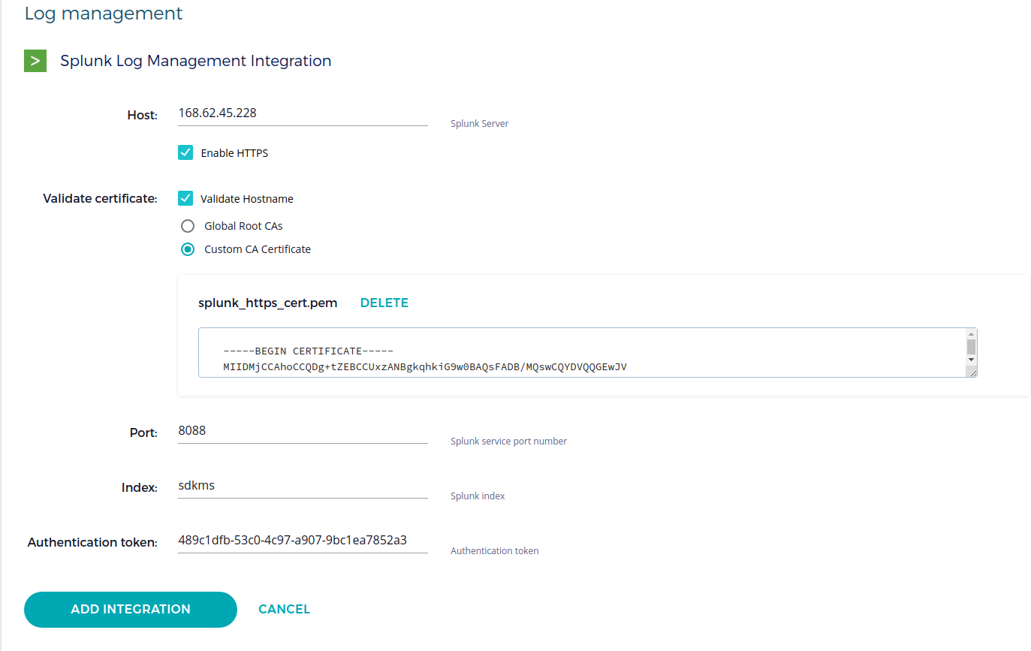

We have four fields, labeled host, source, sourcetype, and component. You can then use these lookup configurations to add enriched fields to your event data.

For the events and logs index, the Input Data Type should be Events. For the metrics index, the Input Data Type can be Metrics. Confirm that the indexes are available.

The HTTP Event Collector (HEC) lets you send data and application events to a Splunk deployment over the HTTP and Secure HTTP (HTTPS) protocols. Because HEC uses a token-based authentication model, we need to generate a new token. This is done in the Data Inputs configuration section: select "HTTP Event Collector", fill in the name, and click Next. On the next page, permit the token to write to the two indexes we created.

If you don't already have Helm installed on your workstation or bastion server, follow the guide "Install and Use Helm 3 on Kubernetes Cluster". You can validate the installation by checking the installed version of Helm.

Grant the privileged security context constraint (SCC) to the service account `$sa`:

`oc adm policy add-scc-to-user privileged -z $sa`

After deployment, log in to Splunk and check that logs, events and metrics are arriving.

Splunk option `splunk-gzip` (optional): enables or disables gzip compression when sending events to a Splunk Enterprise or Splunk Cloud instance.

This might not be the Red Hat recommended way of storing OpenShift events and logs. Refer to the OpenShift documentation for more details on Cluster Logging.
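To check that the HEC token and the two indexes accept data, you can post events to HEC yourself. The Python sketch below only builds the request (it does not send it) for one log event and one metric. It assumes the standard HEC event endpoint on port 8088 and the single-metric JSON format (`"event": "metric"` with `metric_name` and `_value` inside `fields`); the host name, the token and the index names `openshift_events` and `openshift_metrics` are hypothetical placeholders.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your Splunk host and the HEC token you created.
HEC_URL = "https://splunk.example.com:8088/services/collector/event"
HEC_TOKEN = "00000000-0000-0000-0000-000000000000"


def build_hec_request(url, token, payloads):
    """Build (but do not send) a POST request carrying one or more HEC payloads.

    HEC accepts several JSON objects concatenated in one request body (batching).
    """
    body = "".join(json.dumps(p) for p in payloads).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            # HEC token authentication uses the "Splunk <token>" scheme.
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Log/event payload for the events index (index name is hypothetical).
log_event = {
    "event": {"message": "pod restarted", "namespace": "demo"},
    "index": "openshift_events",
    "sourcetype": "_json",
}

# Metric payload in the single-metric JSON format for the metrics index
# (index name is hypothetical).
metric_event = {
    "event": "metric",
    "index": "openshift_metrics",
    "fields": {"metric_name": "cpu.usage", "_value": 12.5, "node": "worker-1"},
}

req = build_hec_request(HEC_URL, HEC_TOKEN, [log_event, metric_event])
# urllib.request.urlopen(req) would send it; left out so the sketch runs offline.
```

Sending the request is then a single `urllib.request.urlopen(req)` call. If the token has not been permitted to write to a named index, HEC should reject the request, which makes this a quick check of the index permissions set on the token.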

You will need at least two indexes for this deployment: one for logs and events, and another one for metrics. Create the events and logs index. The actual implementation will be as shown in the diagram below.

A monitor stanza was added to inputs.conf at the path `C:\Program Files\SplunkUniversalForwarder\etc\apps\Splunk_TA_windows\local`. The inputs.conf entry reads as below: `monitor://C:\Users`

Support for modular inputs in Splunk Enterprise 5.0 and later enables you to add new types of inputs to Splunk Enterprise that are treated as native Splunk Enterprise inputs. This modular input makes an HTTPS request to the GitHub Enterprise Audit Log REST API endpoint at a definable interval to fetch audit log data.
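A modular input of this kind boils down to building an authenticated HTTPS request and repeating it on a schedule. The Python sketch below is hypothetical and is not the add-on's actual code: it assumes the GitHub REST API's enterprise audit-log endpoint (`/enterprises/{enterprise}/audit-log` under `https://<host>/api/v3` on GitHub Enterprise Server), and the host, enterprise slug and token are placeholders. The `poll` helper takes the fetch function and the sleep function as parameters so the schedule can be exercised without network access.

```python
import time
import urllib.parse
import urllib.request

# Hypothetical placeholders for a GitHub Enterprise Server instance.
BASE_URL = "https://github.example.com/api/v3"
ENTERPRISE = "my-enterprise"
TOKEN = "placeholder-token"


def build_audit_log_request(base_url, enterprise, token, per_page=100):
    """Build a GET request for the enterprise audit-log endpoint (assumed path)."""
    query = urllib.parse.urlencode({"per_page": per_page})
    slug = urllib.parse.quote(enterprise, safe="")
    return urllib.request.Request(
        f"{base_url}/enterprises/{slug}/audit-log?{query}",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )


def poll(fetch, interval_seconds, max_runs, sleep=time.sleep):
    """Call fetch() max_runs times, waiting interval_seconds between calls.

    A real modular input would keep running until Splunk stops it and write
    each audit event to stdout for indexing; max_runs only keeps this finite.
    """
    results = []
    for run in range(max_runs):
        results.append(fetch())
        if run < max_runs - 1:
            sleep(interval_seconds)
    return results


if __name__ == "__main__":
    request = build_audit_log_request(BASE_URL, ENTERPRISE, TOKEN)
    print(request.full_url)
    # Offline stand-in for fetching; with network access, fetch could be
    # lambda: json.load(urllib.request.urlopen(request)).
    batches = poll(lambda: [], interval_seconds=300, max_runs=3, sleep=lambda s: None)
    print(len(batches))
```

Keeping the interval a plain parameter mirrors the "definable interval" of the modular input: the schedule lives in configuration, not in the fetch logic.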

For this setup you need the following items:

- A working OpenShift cluster with the oc command-line tool configured.
- An HEC token, used by the HTTP Event Collector to authenticate the event data.

There will be three types of deployments on OpenShift for this purpose:

- A Deployment for collecting changes in OpenShift objects.
- One DaemonSet on each OpenShift node for metrics collection.
- One DaemonSet on each OpenShift node for logs collection.

With a `monitor://` input, the monitored path can be an entire directory or just a single file. Whenever the file content changes, the new data is picked up and sent for indexing, so you need not specify intervals such as 5m or 30 min.
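The behaviour of a monitor input can be illustrated with offset-based tailing: remember how many bytes of each file have already been read and, on the next pass, read only what was appended. This Python sketch is an illustration under that assumption, not Splunk's implementation (the forwarder tracks file positions itself and decides when to check, which is why no interval is configured); the explicit polling pass and the function names are hypothetical. It accepts a single file or a directory, and restarts from the beginning if a file shrinks.

```python
import os
import tempfile


def read_new_data(path, offset):
    """Return (new_bytes, new_offset) for data appended to path since offset.

    If the file is now smaller than offset (truncated or replaced), re-read
    it from the start.
    """
    if os.path.getsize(path) < offset:
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    return data, offset + len(data)


def poll_path(path, offsets):
    """One pass over a single file or every regular file in a directory.

    offsets maps file path -> bytes already read and is updated in place.
    Returns {path: new_bytes} for files that gained data since the last pass.
    """
    if os.path.isdir(path):
        files = [entry.path for entry in os.scandir(path) if entry.is_file()]
    else:
        files = [path]
    changed = {}
    for file_path in files:
        data, offsets[file_path] = read_new_data(file_path, offsets.get(file_path, 0))
        if data:
            changed[file_path] = data
    return changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        log = os.path.join(d, "app.log")
        with open(log, "wb") as f:
            f.write(b"first line\n")
        offsets = {}
        print(poll_path(d, offsets))  # the new file's content is picked up
        with open(log, "ab") as f:
            f.write(b"second line\n")
        print(poll_path(d, offsets))  # only the appended line is returned
```

Reading in binary mode keeps the offsets as true byte positions, so they can be compared directly with the file size when checking for truncation.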
